TDT4215: Web-intelligence

# Curriculum

As of spring 2016 the curriculum consists of:

- __A Semantic Web Primer__, chapters 1-5, 174 pages

- __Sentiment Analysis and Opinion Mining__, chapters 1-5, 90 pages

- __Recommender Systems__, chapters 1-3 and 7, 103 pages

- _Kreutzer & Witte:_ [Opinion Mining Using SentiWordNet](http://stp.lingfil.uu.se/~santinim/sais/Ass1_Essays/Neele_Julia_SentiWordNet_V01.pdf)

- _Turney:_ [Thumbs Up or Thumbs Down? Semantic Orientation Applied to Unsupervised Classification of Reviews](http://www.aclweb.org/anthology/P02-1053.pdf)

- _Liu, Dolan, & Pedersen:_ [Personalized News Recommendation Based on Click Behavior](http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.308.3087&rep=rep1&type=pdf)

# Semantic Web

## The Semantic Web Vision / Motivation

The Semantic Web is an extension of the World Wide Web that promotes common data formats and exchange protocols. Its purpose is to make the data on the Web machine-readable. It is not a matter of artificial intelligence: whereas the ultimate goal of AI is to build an intelligent agent exhibiting human-level (or higher) intelligence, the goal of the Semantic Web is to assist human users in their day-to-day online activities.

The Semantic Web uses languages specifically designed for data:

- Resource Description Framework (RDF)

- Web Ontology Language (OWL)

- Extensible Markup Language (XML)

Together these languages can describe arbitrary things like people, meetings or airplane parts.

## Ontology

An ontology is a formal and explicit definition of the types, properties, functions and relationships of entities, which results in an abstract model of some phenomenon in the world. Typically, an ontology consists of classes arranged in a hierarchy. Several languages can describe an ontology, but the semantics they can express vary.

## RDF

> Resource Description Framework

RDF is a data model used to describe resources and define ontologies. It is domain-neutral and application-neutral, and it supports internationalization. RDF is an abstract model and is therefore not tied to a specific syntax. XML is often used to serialize RDF data, but it is not required.

### RDF model

The core of RDF is describing resources, which are basically "things" in the world that can be described by words or by their relations to other resources. An RDF description consists of triples that together describe something about a particular resource. A triple consists of _(Subject, Predicate, Object)_ and is called a __statement__.

The __subject__ is the resource, the thing we would like to talk about. Examples are authors, apartments, people or hotels. Every resource has a URI _(Uniform Resource Identifier)_. This could be an ISBN number, a URL, coordinates, etc.

The __predicate__ states the relation between the subject and the object and is also called a property. For example, Anna is a _friend of_ Bruce, Harry Potter and the Philosopher's Stone was _written by_ J.K. Rowling, and Oslo is _located in_ Norway.

The __object__ is either another resource or a literal value, such as a string or a number.

The statements are often modeled as a graph, and an example could be: _(lit:J.K.Rowling, lit:wrote, lit:HarryPotter)_.

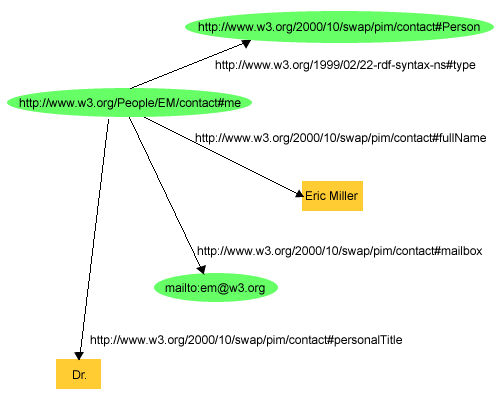

Below is a more complex example, in which _http://www.w3.org/People/EM/contact#me_ has the property _fullName_, defined in _http://www.w3.org/2000/10/swap/pim/contact_, with the value _Eric Miller_.

### Serialization formats

Common serialization formats are N-Triples, Turtle, RDF/XML and RDF/JSON.

N-Triples

: A line-based plain-text format for encoding an RDF graph, with one triple per line.

Turtle

: Turtle can only serialize valid RDF graphs. It is generally recognized as being more readable and easier to edit manually than its XML counterpart.

RDF/XML

: Expresses an RDF graph as an XML document.

RDF/JSON

: Expresses an RDF graph as a JSON document.
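As an illustration of the simplest format, the following Python sketch stores the Eric Miller statement as a (subject, predicate, object) tuple and serializes it as N-Triples. The helper names are hypothetical, the property IRI assumes _fullName_ is the `#fullName` fragment of the contact vocabulary, and only IRIs and plain string literals are handled; blank nodes, language tags and datatypes are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Literal:
    """A plain string literal (no language tag or datatype in this sketch)."""
    value: str


def term_to_nt(term):
    """Render one RDF term in N-Triples syntax: IRIs in <>, literals in quotes."""
    if isinstance(term, Literal):
        # Escape backslashes first, then double quotes and newlines.
        escaped = (term.value.replace("\\", "\\\\")
                   .replace('"', '\\"')
                   .replace("\n", "\\n"))
        return f'"{escaped}"'
    return f"<{term}>"  # any other term is treated as an IRI


def to_ntriples(triples):
    """Serialize triples as N-Triples: one statement per line, ending in ' .'."""
    return "\n".join(
        f"{term_to_nt(s)} {term_to_nt(p)} {term_to_nt(o)} ." for s, p, o in triples
    )


CONTACT = "http://www.w3.org/2000/10/swap/pim/contact#"
graph = [
    ("http://www.w3.org/People/EM/contact#me", CONTACT + "fullName", Literal("Eric Miller")),
]

print(to_ntriples(graph))
```

Keeping literals as a separate type is what lets the serializer tell a string value apart from a resource identifier, mirroring the resource/literal distinction for objects.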

## RDFS

> Resource Description Framework Schema

While RDF lets users describe resources using their own vocabulary, it does not specify what that vocabulary means in any domain. Users must define the meaning of their vocabularies in terms of a set of basic, domain-independent structures defined by RDF Schema.

RDF Schema is a primitive ontology language. Its key concepts are:

- Classes and subclasses

- Properties and sub-properties

- Relations

- Domain and range restrictions

### Classes

A class is a set of elements that defines a type of object. Individual objects are instances of that class. Classes can be structured hierarchically, with subclasses and superclasses.
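The practical consequence of a class hierarchy is that an instance of a subclass is also an instance of every superclass. The sketch below (hypothetical class and individual names, with plain strings standing in for URIs) follows subclass links transitively to find every type of an individual. It illustrates the idea behind the RDFS rules for _rdfs:subClassOf_ and _rdf:type_, not a complete RDFS reasoner.

```python
# Hypothetical class hierarchy: each (sub, super) pair is an rdfs:subClassOf statement.
SUBCLASS_OF = {
    ("AssistantProfessor", "AcademicStaff"),
    ("AcademicStaff", "StaffMember"),
    ("StaffMember", "Person"),
}

# Hypothetical rdf:type assertions: (individual, class).
TYPE_OF = {("alice", "AssistantProfessor")}


def superclasses(cls):
    """Return every class reachable from cls via subClassOf (transitive closure)."""
    result, stack = set(), [cls]
    while stack:
        current = stack.pop()
        for sub, sup in SUBCLASS_OF:
            if sub == current and sup not in result:  # the check also stops cycles
                result.add(sup)
                stack.append(sup)
    return result


def types_of(individual):
    """Asserted types plus all types inferred through the class hierarchy."""
    asserted = {c for i, c in TYPE_OF if i == individual}
    inferred = set(asserted)
    for c in asserted:
        inferred |= superclasses(c)
    return inferred


print(sorted(types_of("alice")))
```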

### Properties

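Properties describe relations between resources. Domain and range restrictions specify which classes a property applies to (domain) and which values it can take (range). Properties are defined globally, independently of any class, so the same property can be used with several classes. Properties can also be structured hierarchically, with sub-properties and super-properties, in the same way as classes.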
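In RDFS, domain and range are used for inference rather than for rejecting data: if property P has domain C and the statement (x, P, y) holds, it follows that x is of type C, and a range D likewise gives y the type D. If P is a sub-property of Q, (x, P, y) entails (x, Q, y). A minimal sketch of these three rules, applied until no new statements appear, with hypothetical names and the string "type" standing in for _rdf:type_:

```python
# Hypothetical schema: property -> domain class, property -> range class,
# and (sub-property, super-property) pairs.
DOMAIN = {"isTaughtBy": "Course"}
RANGE = {"isTaughtBy": "AcademicStaff"}
SUBPROPERTY_OF = {("isTaughtBy", "involves")}

# Hypothetical data: (subject, predicate, object) statements.
DATA = {("TDT4215", "isTaughtBy", "alice")}


def rdfs_infer(triples):
    """Apply the domain, range and sub-property rules until nothing new is derived."""
    closure = set(triples)
    while True:
        new = set()
        for s, p, o in closure:
            if p in DOMAIN:
                new.add((s, "type", DOMAIN[p]))  # subject gets the domain class
            if p in RANGE:
                new.add((o, "type", RANGE[p]))   # object gets the range class
            for sub, sup in SUBPROPERTY_OF:
                if p == sub:
                    new.add((s, sup, o))         # statement holds for the super-property
        if new <= closure:
            return closure
        closure |= new


for triple in sorted(rdfs_infer(DATA)):
    print(triple)
```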

## SPARQL

> SPARQL Protocol and RDF Query Language

RDF is used to represent knowledge; SPARQL is used to query this representation. The SPARQL infrastructure is a __triple store__ (or graph store), a database that holds the RDF data and provides an endpoint for queries. Queries use a syntax similar to Turtle, and SPARQL selects information by matching __graph patterns__ against the stored triples.

SPARQL provides facilities for __filtering__ solutions with both numeric and string comparisons, e.g. FILTER (?bedroom > 2).
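Graph pattern matching can be sketched in plain Python: variables start with ?, each triple pattern is matched against every stored triple, and variables shared between patterns must get the same value. The FILTER is then applied to each solution. The apartment data and property names are hypothetical, and the code roughly corresponds to a query with the patterns (?apt, bedrooms, ?bedroom) and (?apt, city, "Trondheim") plus FILTER (?bedroom > 2). Real triple stores use indexes and query planning rather than this naive search.

```python
# A tiny in-memory "triple store" with hypothetical apartment data.
TRIPLES = [
    ("apt1", "bedrooms", 3), ("apt1", "city", "Trondheim"),
    ("apt2", "bedrooms", 2), ("apt2", "city", "Trondheim"),
    ("apt3", "bedrooms", 4), ("apt3", "city", "Oslo"),
]


def is_var(term):
    """Query variables are strings starting with '?'."""
    return isinstance(term, str) and term.startswith("?")


def match_pattern(pattern, binding):
    """Yield extended bindings for one triple pattern, given the current binding."""
    for triple in TRIPLES:
        new = dict(binding)
        for p_term, t_term in zip(pattern, triple):
            if is_var(p_term):
                if p_term in new and new[p_term] != t_term:
                    break  # variable already bound to a different value
                new[p_term] = t_term
            elif p_term != t_term:
                break  # constant does not match
        else:
            yield new


def match_bgp(patterns, binding=None):
    """Match a basic graph pattern (list of triple patterns) by joining them in order."""
    binding = binding or {}
    if not patterns:
        yield binding
        return
    for extended in match_pattern(patterns[0], binding):
        yield from match_bgp(patterns[1:], extended)


query = [("?apt", "bedrooms", "?bedroom"), ("?apt", "city", "Trondheim")]
results = [b for b in match_bgp(query) if b["?bedroom"] > 2]  # FILTER (?bedroom > 2)
print(results)
```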

UNION and OPTIONAL are constructs that make it easier for SPARQL to deal with __open world__ data, meaning that there may always be more information that is not yet known. This is a consequence of the principle that anyone can make statements about any resource, so the data about a resource may be incomplete. UNION combines the results of two alternative patterns, e.g. {} UNION {}. OPTIONAL adds bindings from the optional part to a result if that part matches; if it does not match, it creates no bindings but keeps the result instead of eliminating it.
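At the level of solutions (maps from variables to values), UNION concatenates the solutions of both alternatives, while OPTIONAL behaves like a left outer join: each required solution is extended with every compatible optional solution, or kept unchanged if there is none. A simplified sketch on hypothetical, already computed solutions; it ignores FILTERs inside OPTIONAL:

```python
def compatible(a, b):
    """Two solutions are compatible if they agree on every shared variable."""
    return all(a[v] == b[v] for v in a.keys() & b.keys())


def union(left, right):
    """{ P1 } UNION { P2 }: all solutions of either pattern."""
    return left + right


def optional(required, optional_solutions):
    """P1 OPTIONAL { P2 }: a left outer join that never drops a required solution."""
    out = []
    for r in required:
        merged = [{**r, **o} for o in optional_solutions if compatible(r, o)]
        out.extend(merged if merged else [r])  # no match: keep r, variables stay unbound
    return out


# Hypothetical solutions: every person has a name, only some have an e-mail address.
names = [{"?p": "anna", "?name": "Anna"}, {"?p": "bruce", "?name": "Bruce"}]
emails = [{"?p": "anna", "?email": "anna@example.org"}]

print(optional(names, emails))
print(union([{"?x": "anna"}], [{"?x": "bruce"}]))
```

Bruce stays in the result without an ?email binding, which is exactly the open-world behaviour: missing data does not eliminate a solution.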

SPARQL offers several ways to organize the result set, among others LIMIT, DISTINCT, ORDER BY (with DESC or ASC), the aggregates COUNT, SUM, MIN, MAX and AVG, and GROUP BY.
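These modifiers work on the solution sequence after pattern matching. The Python sketch below mimics DISTINCT, ORDER BY DESC(?price) with LIMIT 2, and GROUP BY ?city with COUNT and AVG, on hypothetical apartment solutions:

```python
from collections import defaultdict

# Hypothetical solutions produced by a graph pattern.
solutions = [
    {"?apt": "apt1", "?city": "Trondheim", "?price": 12000},
    {"?apt": "apt2", "?city": "Trondheim", "?price": 9000},
    {"?apt": "apt3", "?city": "Oslo", "?price": 15000},
    {"?apt": "apt3", "?city": "Oslo", "?price": 15000},  # duplicate row
]

# DISTINCT: drop duplicate solutions while keeping first-seen order.
distinct = list({tuple(sorted(s.items())): s for s in solutions}.values())

# ORDER BY DESC(?price) LIMIT 2
top2 = sorted(distinct, key=lambda s: s["?price"], reverse=True)[:2]

# GROUP BY ?city, computing COUNT(?apt) and AVG(?price) per group.
groups = defaultdict(list)
for s in distinct:
    groups[s["?city"]].append(s["?price"])
stats = {city: (len(p), sum(p) / len(p)) for city, p in groups.items()}

print([s["?apt"] for s in top2])
print(stats)
```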

SPARQL Update provides mechanisms for inserting, updating and deleting information in triple stores. ASK (used instead of SELECT) returns true or false instead of a result set, and CONSTRUCT (also used instead of SELECT) returns an RDF graph, built by filling a template with the query results, instead of a list of results. CONSTRUCT can therefore be used to construct new graphs.
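ASK and CONSTRUCT reuse the same pattern matching as SELECT and differ only in what they do with the solutions. A sketch on a hypothetical solution for the pattern (?author, wrote, ?book), in the style of the J.K. Rowling example, using a made-up _hasAuthor_ property in the CONSTRUCT template:

```python
def ask(solutions):
    """ASK: true if the pattern has at least one solution."""
    return len(solutions) > 0


def construct(template, solutions):
    """CONSTRUCT: instantiate the triple template once per solution, as a set of triples."""
    graph = set()
    for sol in solutions:
        for pattern in template:
            # Variables are replaced by their value; constants are not keys in sol.
            triple = tuple(sol.get(term, term) for term in pattern)
            # Skip triples that would still contain an unbound variable.
            if not any(isinstance(t, str) and t.startswith("?") for t in triple):
                graph.add(triple)
    return graph


# Hypothetical solutions of WHERE { ?author wrote ?book }.
solutions = [{"?author": "lit:J.K.Rowling", "?book": "lit:HarryPotter"}]

print(ask(solutions))
print(construct([("?book", "hasAuthor", "?author")], solutions))
```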

# Sentiment analysis

Entity- and aspect-level sentiment classification aims to discover opinions about an entity or its aspects. For instance, in the sentence "The iPhone’s call quality is good, but its battery life is short", two aspects are evaluated: call quality and battery life. The entity is the iPhone. The opinion on the iPhone's call quality is positive, while the opinion on its battery life is negative. Finding and classifying aspects is hard, as there are many ways to express positive and negative opinions, including metaphors and comparisons.
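A very naive sketch of aspect-level classification for the iPhone sentence: split the sentence into clauses at commas and "but", find a known aspect term in each clause, and score that clause with a small sentiment lexicon. The aspect list and lexicon are hypothetical and hand-made for this one sentence. Note that "short" is only negative for an aspect like battery life, which is one of the context problems of sentiment lexicons.

```python
import re

# Hypothetical, hand-made resources for this single example.
ASPECTS = ["call quality", "battery life"]
LEXICON = {"good": 1, "great": 1, "short": -1, "bad": -1}


def aspect_sentiments(sentence):
    """Assign +1/-1 to each aspect mentioned, using only words in the same clause."""
    result = {}
    # Treat commas and the word "but" as clause boundaries.
    clauses = re.split(r",|\bbut\b", sentence.lower())
    for clause in clauses:
        words = re.findall(r"[a-z']+", clause)
        score = sum(LEXICON.get(w, 0) for w in words)
        for aspect in ASPECTS:
            if aspect in clause and score != 0:
                result[aspect] = 1 if score > 0 else -1
    return result


print(aspect_sentiments("The iPhone's call quality is good, but its battery life is short"))
```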

## Sentiment Lexicon and Its Issues

Some words can immediately be identified as positive or negative, like good, bad, excellent and terrible. There are also phrases and idioms that can be identified as positive or negative. A list of such words and phrases is called a sentiment lexicon.
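The simplest use of a sentiment lexicon sums the scores of the lexicon entries found in a text, matching multi-word phrases before single words so that a phrase is not also counted word by word. The entries and scores below are hypothetical; real lexicons such as SentiWordNet assign graded scores rather than small integers.

```python
import re

# Hypothetical lexicon: single words and multi-word phrases with a polarity score.
LEXICON = {
    "good": 1, "excellent": 2, "bad": -1, "terrible": -2,
    "cost an arm and a leg": -1,
}


def lexicon_score(text):
    """Sum lexicon scores; longer entries are matched (and removed) first."""
    text = text.lower()
    score = 0
    for entry in sorted(LEXICON, key=len, reverse=True):
        pattern = r"\b" + re.escape(entry) + r"\b"
        hits = len(re.findall(pattern, text))
        score += hits * LEXICON[entry]
        text = re.sub(pattern, " ", text)  # avoid counting the words again
    return score


print(lexicon_score("Excellent screen, but it will cost an arm and a leg."))
```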

A sentiment lexicon alone is not enough for accurate sentiment analysis. Several issues are known:

1. Words and phrases can have different meanings in different contexts. For example, "suck" usually has a negative meaning, but it can be positive in the right context: "This vacuum cleaner really sucks".

2. A sentence containing sentiment words does not necessarily express a sentiment. For example, in "If product X contains a _great_ feature Y, I'll buy it", _great_ does not express a positive or negative opinion about product X.

3. Sarcastic sentences are hard to deal with. Sarcasm is less common in product reviews than in political discussions, but it does occur, e.g. "What an awesome product! It stopped working in two days".

4. Sentences without sentiment words can also express opinions. "This laptop consumes a lot of power." implies a negative opinion about the laptop, even though the sentence is objective, as it states a fact.

## Opinion lexicon generation

## Aspect-based opinion mining

## Opinion mining of comparative sentences

## Opinion spam detection

## Unsupervised search-based approach

## Unsupervised lexicon-based approach

# Recommender systems

## Problem domain

Recommender systems are used to match users with items. This is done to avoid information overload and to assist in sales by guiding, advising and persuading individuals when they are looking to buy a product or a service.

Recommender systems elicit the interests and preferences of individuals and make recommendations accordingly. This has the potential to support and improve the quality of a customer's decisions.

Different recommender systems require different designs and paradigms, depending on what data is available, whether user feedback is implicit or explicit, and the characteristics of the domain.

## Purpose and success criteria

Success can be judged from different perspectives/aspects:

- Which perspective applies depends on domain and purpose

- No holistic evaluation scenario exists

Retrieval perspective:

- Reduce search costs

- Provide "correct" proposals

- Users know in advance what they want

Recommendation perspective:

- Serendipity: identify items from the Long Tail

- Users did not know the items existed

Prediction perspective:

- Predict to what degree users like an item

- Most popular evaluation scenario in research

Interaction perspective:

- Give users a "good feeling"

- Educate users about the product domain

- Convince/persuade users and explain the recommendations

Conversion perspective:

- Commercial situations

- Increase "hit", "clickthrough" and "lookers to bookers" rates

- Optimize sales margins and profit
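For the prediction perspective, one common way to measure accuracy is to compare predicted ratings with the ratings users actually gave, for example with mean absolute error (MAE) or root mean squared error (RMSE). A sketch with hypothetical held-out ratings:

```python
import math


def mae(predicted, actual):
    """Mean absolute error between predicted and actual ratings."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def rmse(predicted, actual):
    """Root mean squared error; penalizes large errors more than MAE does."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


# Hypothetical held-out ratings on a 1-5 scale.
predicted = [4.0, 3.0, 5.0, 2.0]
actual = [5, 3, 4, 2]
print(mae(predicted, actual), rmse(predicted, actual))
```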

## Paradigms of recommender systems

There are many different ways to design a recommender system. What they have in common is that they feed input into a _recommendation component_, which uses it to produce a _recommendation list_. The paradigms differ in what goes into the recommendation component. For personalized recommendations, the input includes a user profile and contextual parameters.

_Collaborative recommender systems_, or "tell me what is popular among my peers", use community data.
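A minimal sketch of one common collaborative technique, user-based nearest-neighbour prediction: measure how similar other users' past ratings are to the active user's (here with cosine similarity over co-rated items) and predict a rating as the similarity-weighted average of the neighbours' ratings. The ratings are hypothetical, and many systems instead use Pearson correlation with mean-centred ratings.

```python
import math

# Hypothetical user -> {item: rating} matrix.
RATINGS = {
    "alice": {"item1": 5, "item2": 3, "item3": 4},
    "bob":   {"item1": 4, "item2": 3, "item3": 4, "item4": 5},
    "carol": {"item1": 1, "item2": 5, "item4": 2},
}


def cosine(u, v):
    """Cosine similarity of two users over the items both have rated."""
    common = RATINGS[u].keys() & RATINGS[v].keys()
    if not common:
        return 0.0
    dot = sum(RATINGS[u][i] * RATINGS[v][i] for i in common)
    norm_u = math.sqrt(sum(RATINGS[u][i] ** 2 for i in common))
    norm_v = math.sqrt(sum(RATINGS[v][i] ** 2 for i in common))
    return dot / (norm_u * norm_v)


def predict(user, item):
    """Similarity-weighted average of the neighbours' ratings for item."""
    neighbours = [(cosine(user, v), r[item]) for v, r in RATINGS.items()
                  if v != user and item in r]
    total_sim = sum(sim for sim, _ in neighbours)
    if total_sim == 0:
        return None  # no usable neighbours
    return sum(sim * rating for sim, rating in neighbours) / total_sim


print(round(predict("alice", "item4"), 2))
```

Bob, whose ratings closely resemble Alice's, gets a larger weight than Carol, so the prediction lands near Bob's rating of 5.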

_Content-based recommender systems_, or "show me more of what I've previously liked", use the features of products.
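A minimal content-based sketch: each item is represented by a feature vector (here hypothetical keyword weights), a user profile is built by averaging the vectors of items the user liked, and unseen items are ranked by cosine similarity to that profile. Item names and features are hypothetical.

```python
import math

# Hypothetical items described by keyword features.
ITEMS = {
    "news1": {"football": 1.0, "norway": 1.0},
    "news2": {"football": 1.0, "england": 1.0, "transfer": 1.0},
    "news3": {"election": 1.0, "norway": 1.0},
    "news4": {"cooking": 1.0},
}


def cosine(a, b):
    """Cosine similarity of two sparse feature vectors."""
    dot = sum(w * b.get(f, 0.0) for f, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def profile(liked):
    """Average the feature vectors of the items the user liked."""
    prof = {}
    for item in liked:
        for f, w in ITEMS[item].items():
            prof[f] = prof.get(f, 0.0) + w / len(liked)
    return prof


def recommend(liked, k=2):
    """Rank items the user has not seen by similarity to the profile."""
    prof = profile(liked)
    candidates = [i for i in ITEMS if i not in liked]
    return sorted(candidates, key=lambda i: cosine(prof, ITEMS[i]), reverse=True)[:k]


print(recommend(["news1"]))
```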

_Knowledge-based recommender systems_, or "tell me what fits my needs", use both the features of products and knowledge models to predict what the user needs.
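Knowledge-based systems often work with explicitly stated requirements instead of rating history. A minimal constraint-based sketch: the user's needs are expressed as predicates over product features, and only products satisfying all of them are recommended, cheapest first. The catalogue, feature names and ranking rule are hypothetical.

```python
# Hypothetical product catalogue.
CAMERAS = [
    {"name": "A", "price": 300, "zoom": 3, "waterproof": False},
    {"name": "B", "price": 450, "zoom": 10, "waterproof": True},
    {"name": "C", "price": 700, "zoom": 12, "waterproof": True},
    {"name": "D", "price": 400, "zoom": 5, "waterproof": True},
]

# Hypothetical requirements elicited from the user, as predicates over features.
requirements = [
    lambda c: c["price"] <= 500,
    lambda c: c["zoom"] >= 5,
    lambda c: c["waterproof"],
]


def recommend(products, constraints):
    """Keep products that satisfy every constraint, ranked by price."""
    matching = [p for p in products if all(check(p) for check in constraints)]
    return [p["name"] for p in sorted(matching, key=lambda p: p["price"])]


print(recommend(CAMERAS, requirements))
```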

_Hybrid recommender systems_ use a combination of the above and/or compositions of different mechanisms.
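One simple hybrid design is a weighted combination: each component scores the candidate items and the final score is a weighted sum. The component scores and weights below are hypothetical; choosing the weights is itself a design and evaluation problem.

```python
def weighted_hybrid(score_lists, weights):
    """Combine per-item scores from several recommenders with fixed weights."""
    combined = {}
    for scores, weight in zip(score_lists, weights):
        for item, score in scores.items():
            combined[item] = combined.get(item, 0.0) + weight * score
    return sorted(combined, key=combined.get, reverse=True)


# Hypothetical normalized scores (0..1) from two components.
collaborative = {"item1": 0.9, "item2": 0.4, "item3": 0.1}
content_based = {"item1": 0.2, "item2": 0.8, "item3": 0.9}

print(weighted_hybrid([collaborative, content_based], [0.5, 0.5]))
```

Shifting the weights towards one component moves the ranking towards that component's preferences.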

## Collaborative filtering

## Content-based filtering

## Semantic Vector space model