TDT4215: Web-intelligence

Curriculum

As of spring 2016 the curriculum consists of:

- A Semantic Web Primer, chapter 1-5, 174 pages

- Sentiment Analysis and Opinion Mining, chapter 1-5, 90 pages

- Recommender Systems, chapter 1-3, and 7, 103 pages

- Kreutzer & Witte: Opinion Mining Using SentiWordNet

- Turney: Thumbs Up or Thumbs Down? Semantic Orientation Applied to Unsupervised Classification of Reviews

- Liu, Dolan, & Pedersen: Personalized News Recommendation Based on Click Behavior

Semantic Web

The Semantic Web Vision / Motivation

The Semantic Web is an extension of the ordinary Web that promotes common data formats and exchange protocols. The point of the Semantic Web is to make the content of the Web machine-readable. It is not a matter of artificial intelligence: whereas the ultimate goal of AI is to build an intelligent agent exhibiting human-level intelligence (or higher), the goal of the Semantic Web is to assist human users in their day-to-day online activities.

Semantic Web uses languages specifically designed for data:

- Resource Description Framework (RDF)

- Web Ontology Language (OWL)

- Extensible Markup Language (XML)

Together these languages can describe arbitrary things like people, meetings or airplane parts.

Ontology

An ontology is a formal and explicit definition of the types, properties, functions, and relationships between entities, which results in an abstract model of some phenomenon in the world. Typically an ontology consists of classes arranged in a hierarchy. There are several languages that can describe an ontology, but the achieved semantics vary.

RDF

Resource Description Framework

RDF is a data-model used to define ontologies. It's a model that is domain-neutral, application-neutral, and it supports internationalization. RDF is an abstract model, and therefore not language-specific. XML is often used to define RDF data, but it is not a necessity.

RDF model

The core of RDF is to describe resources, which are basically "things" in the world that can be described in words or through relations to other resources. An RDF description consists of many triples that together describe something about a particular resource. A triple consists of (Subject, Predicate, Object) and is called a statement.

The subject is the resource, the thing we'd like to talk about. Examples are authors, apartments, people or hotels. Every resource has a URI (Uniform Resource Identifier). This could be an ISBN number, a URL, coordinates, etc.

The predicate states the relation between the subject and the object and is called a property. For example, Anna is a friend of Bruce, Harry Potter and the Philosopher's Stone was written by J.K. Rowling, or Oslo is located in Norway.

The object is another resource or a literal value.

The statements are often modeled as a graph, and an example could be: (lit:J.K.Rowling, lit:wrote, lit:HarryPotter).
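To make the triple model concrete, here is a minimal Python sketch that stores statements as (subject, predicate, object) tuples and looks them up by pattern. The `ex:` names and the `match` helper are made up for illustration; a real application would use an RDF library and full URIs.

```python
# Hypothetical mini triple store: each statement is a (subject, predicate, object) tuple.
triples = {
    ("lit:J.K.Rowling", "lit:wrote", "lit:HarryPotter"),
    ("ex:Anna", "ex:friendOf", "ex:Bruce"),
    ("ex:Oslo", "ex:locatedIn", "ex:Norway"),
}


def match(store, subject=None, predicate=None, obj=None):
    """Return all triples matching the pattern; None acts as a wildcard."""
    return sorted(
        t for t in store
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )


# Who wrote what?
print(match(triples, predicate="lit:wrote"))
```

Leaving a position as `None` plays roughly the same role as a variable in a graph pattern: it matches any value in that position.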

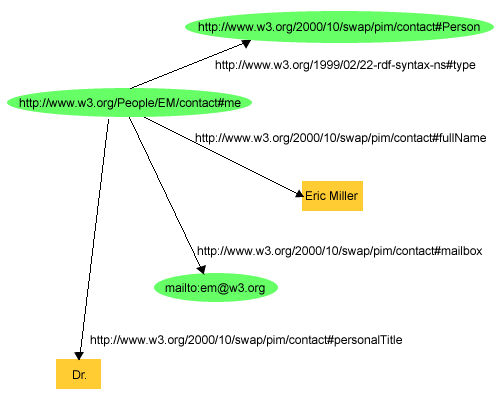

Below is a more complex example where you can see that http://www.w3.org/People/EM/contact#me has the property fullName defined in http://www.w3.org/2000/10/swap/pim/contact, which is set to the value of Eric Miller.

Serialization formats

N-Triples, Turtle, RDF/XML, RDF/JSON.

- N-Triples

  - A plain-text format for encoding an RDF graph.

- Turtle

  - Turtle can only serialize valid RDF graphs. It is generally recognized as being more readable and easier to edit manually than its XML counterpart.

- RDF/XML

  - Expresses an RDF graph as an XML document.

- RDF/JSON

  - Expresses an RDF graph as a JSON document.

RDFS

Resource Description Framework Schema

While the RDF language lets users describe resources using their own vocabulary, it does not specify semantics for any domains. The user must define what those vocabularies mean in terms of a set of basic domain independent structures defined by RDF Schema.

RDF Schema is a primitive ontology language, and key concepts are:

- Classes and subclasses

- Properties and sub-properties

- Relations

- Domain and range restrictions

Classes

A class is a set of elements. It defines a type of object. Individual objects are instances of that class. Classes can be structured hierarchically with sub- and super-classes.

Properties

Properties are restrictions on classes. Objects of a class must obey these properties. Properties are globally defined and can be applied to several classes. Properties can also be structured hierarchically, in the same way as classes.

SPARQL

SPARQL Protocol and RDF Query Language

RDF is used to represent knowledge, SPARQL is used to query this representation. The SPARQL infrastructure is a triple store (or Graph store), a database used to hold the RDF representation. It provides an endpoint for queries. The queries follow a syntax similar to Turtle. SPARQL selects information by matching graph patterns.

Variables are written with a question mark prefix (?var), SELECT is used to determine which variables are of interest, and the pattern to be matched is placed inside WHERE { ... }.

SPARQL provides facilities for filtering based on both numeric and string comparison, e.g. FILTER (?bedroom > 2).

UNION and OPTIONAL are constructs that allow SPARQL to more easily deal with open world data, meaning that there is always more information to be known. This is a consequence of the principle that anyone can make statements about any resource. UNION lets you combine two graph patterns, e.g. {} UNION {}. OPTIONAL adds bindings to a result if the optional part matches; otherwise it creates no bindings for that part but still keeps the result (it does not eliminate it).

There exists a series of possibilities for organizing the result set, among others: LIMIT, DISTINCT, ORDER BY, DESC, ASC, COUNT, SUM, MIN, MAX, AVG, GROUP BY.

SPARQL Update provides mechanisms for inserting, updating and deleting information in triple stores. ASK (instead of SELECT) returns true/false instead of a result set, and CONSTRUCT (instead of SELECT) returns a graph instead of a list of results, so it can be used to construct new graphs.

Since schema information is represented in RDF, SPARQL can query this as well. It allows one to retrieve information, and also to query the semantics of that information.

Example query

Returns all sports, from the ontology, that contains the text "ball" in their name.

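    PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>
    PREFIX dbpediaowl: <http://dbpedia.org/ontology/>

    SELECT DISTINCT ?sport WHERE {
      ?sport rdf:type dbpediaowl:Sport .
      # str() turns the IRI into a string so that regex can match it
      FILTER regex(str(?sport), "ball", "i")
    }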

An online endpoint for queries can be found here.

OWL

Web ontology language

RDF and RDF Schema are limited to binary ground predicates and class/property hierarchies. We sometimes need to express more advanced, more expressive knowledge. The requirements for such a language are:

- well-defined syntax

- a formal semantics

- sufficient expressive power

- convenience of expression

- efficient reasoning support

More specifically, automatic reasoning support is used to:

- check the consistency of the ontology

- check for unintended relations between classes

- check for unintended classifications of instances

There is a trade-off between expressive power and efficient reasoning support. The richer the logical formalism, the less efficient the reasoning support, often crossing the border to undecidability: reasoning on such a logic is not guaranteed to terminate. Because this trade-off exists, two approaches to OWL have been made: Full and DL.

OWL Full

RDF-based semantics

This approach uses all the OWL primitives. Its advantage is that any legal RDF document is also a legal OWL Full document, and any valid RDF Schema inference is also a valid OWL Full conclusion. The problem is that it has become so powerful that it is undecidable: there is no complete (or efficient) reasoning support.

OWL DL

Direct semantics

DL stands for Description Logic, a decidable fragment of predicate logic (first-order logic). This approach offers efficient reasoning support and can make use of a wide range of existing reasoners. The downside is that it loses full compatibility with RDF: an RDF document must in general be extended in some ways and restricted in others to be a valid OWL DL document. However, every legal OWL DL document is a legal RDF document.

Three profiles, OWL 2 EL, OWL 2 QL, and OWL 2 RL, are syntactic subsets that have desirable computational properties. In particular, OWL 2 RL is implementable using rule-based technology and has become the de facto standard for expressive reasoning on the Semantic Web.

The OWL language

OWL uses an extension of RDF and RDFS. It can be hard for humans to read, but most ontology engineers use a specialized ontology development tool, like Protégé.

There are four standard syntaxes for OWL:

- RDF/XML

  - OWL can be expressed using all valid RDF syntaxes.

- Functional-style syntax

  - Used in the language specification document. A compact and readable syntax.

- OWL/XML

  - An XML syntax, which allows us to use off-the-shelf XML authoring tools. Does not follow RDF conventions, but maps closely onto the functional-style syntax.

- Manchester syntax

  - As human-readable as possible. Used in Protégé.

Sentiment analysis

Sentiment analysis is about searching through data to find people's opinions about something. Sentiment analysis is also called opinion mining, opinion extraction, sentiment mining, subjectivity analysis, affect analysis, emotion analysis, or review mining.

Sentiment analysis is important because opinions drive decisions. People will seek other people's opinions when buying something. It is useful to be able to mine opinions on a product, service or topic.

Businesses and organizations are interested in knowing what people think of their products. Individuals are interested in what others think of a product or service. We would like to be able to do a search like "How good is iPhone" and get a general opinion on how good it is.

With the "explosion" of opinion data in social media and similar applications, it is easy to gather data for use in decision-making. It is not always necessary to create questionnaires to get people's opinions. It can, however, be difficult to extract meaning from long blog posts and articles and to summarize them. This is why we need automated sentiment analysis systems.

There are two types of evaluation:

- Regular opinions ("This camera is great!")

- Comparisons ("This camera is better than this other camera.")

Both can be direct or indirect.

The Model

A regular opinion is modeled as a quintuple:

(e, a, s, h, t) = (entity, aspect, sentiment, holder, time)

- Entity

  - The target of the opinion, the "thing" which the opinion is expressed on. (camera)

- Aspect

  - The attribute of the entity, the specific part of the "thing". (battery life)

- Sentiment

  - Classification either as positive, negative or neutral, or a more granular representation between these. (+)

- Holder

  - The opinion source, the one expressing the opinion. (Ola)

- Time

  - Simply the date and time when this opinion was expressed. (05/06/16 12:30)

Example: "Posted 05/06/16 12:30 by Ola: The battery life of this camera is great!"

The task of sentiment analysis is to discover all these quintuples in a given document.

A framework of tasks:

- Entity extraction and categorization

  - entity category = a unique entity

  - entity expression = word or phrase in the text indicating an entity category

- Aspect extraction and categorization

  - explicit and implicit aspect expression

    - Implicit: "this is expensive"

    - Explicit: "the price is high"

- Opinion holder extraction and categorization

- Time extraction and standardization

- Aspect sentiment classification

  - Determine the polarity of the opinion on the aspect

- Opinion quintuple generation

Levels of Analysis

Research has mainly been done on three different levels.

Document-level

Document-level sentiment classification works with entire documents and tries to figure out whether the document has a positive or negative view of the subject in question. An example of this is to look at many reviews and determine whether each author's overall opinion is positive, negative or neutral.

Sentence-level

Sentence-level sentiment classification works with opinion on a sentence level, and is about figuring out if a sentence is positive, negative or neutral to the relevant subject. This is closely related to subjectivity classification, which distinguishes between objective and subjective sentences.

Entity and aspect-level

Entity and aspect-level sentiment classification aims to discover opinions about an entity or its aspects. For instance, in the sentence "The iPhone’s call quality is good, but its battery life is short", two aspects are evaluated: call quality and battery life. The entity is the iPhone. The opinion on the iPhone's call quality is positive, but the opinion on its battery life is negative. It is hard to find and classify these aspects, as there are many ways to express positive and negative opinions: metaphors, comparisons and so on.

There are two core tasks of aspect-based sentiment analysis: the aspect extraction, and the aspect sentiment classification. We'll start by looking at the latter.

Aspect Sentiment Classification

There are two main approaches to this problem: supervised learning and lexicon-based.

Supervised learning. The key issue is how to determine the scope of each sentiment expression. The current main approach is to use parsing to determine the dependencies and other relevant information. It is difficult to scale up to a large number of application domains, since training data is needed for each domain.

Lexicon-based. Some words can be identified as either positive or negative immediately, like good, bad, excellent, terrible and so on. A list of such words and phrases is called a sentiment lexicon. This approach has been shown to perform quite well in a large number of domains. It uses a sentiment lexicon, composite expressions, rules of opinions, and the sentence parse tree to determine the sentiment orientation on each aspect in a sentence.

The algorithm:

1. Input

   - "The voice quality of this phone is not good, but the battery life is long"

2. Mark sentiment words and phrases

   - good [+1] (voice quality)

3. Apply sentiment shifters

   - NOT good [-1] (voice quality)

4. Handle but-clauses

   - long [+1] (battery life)

5. Aggregate opinions

   - voice quality = -1

   - battery life = +1
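The five steps above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the algorithm from the curriculum: the tiny `LEXICON`, the `SHIFTERS` set and the fixed `ASPECTS` list are assumptions, a shifter only applies to the word directly after it, and a but-clause without sentiment words simply receives the opposite orientation of the preceding clause.

```python
import re

# Hypothetical toy resources; a real system would use a full sentiment lexicon.
LEXICON = {"good": 1, "great": 1, "excellent": 1, "bad": -1, "terrible": -1}
SHIFTERS = {"not", "never", "no"}
ASPECTS = ["voice quality", "battery life"]


def clause_score(clause):
    """Steps 2 and 3: mark sentiment words and flip those preceded by a shifter."""
    words = re.findall(r"[a-z']+", clause.lower())
    score = 0
    for i, word in enumerate(words):
        if word in LEXICON:
            value = LEXICON[word]
            if i > 0 and words[i - 1] in SHIFTERS:
                value = -value
            score += value
    return score


def aspect_sentiments(sentence):
    """Steps 4 and 5: handle but-clauses and aggregate a score per aspect."""
    clauses = re.split(r"\bbut\b", sentence, flags=re.IGNORECASE)
    result = {}
    previous = 0
    for index, clause in enumerate(clauses):
        score = clause_score(clause)
        if score == 0 and index > 0:
            # No sentiment word after "but": assume the opposite of the previous clause.
            score = -previous
        for aspect in ASPECTS:
            if aspect in clause.lower():
                result[aspect] = result.get(aspect, 0) + score
        previous = score
    return result


print(aspect_sentiments(
    "The voice quality of this phone is not good, but the battery life is long"
))
```

For the input sentence from step 1 this yields -1 for voice quality and +1 for battery life, matching the aggregation in step 5.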

There are some known issues with sentiment lexicons. The orientation of a word can differ between domains ("this camera sucks" is negative, "this vacuum cleaner sucks" is positive). Sentences containing sentiment words may not express any sentiment ("which camera is good?"). Sarcasm is hard to detect ("what a great car, stopped working in two days"). Finally, sentences without sentiment words can also imply opinions (“This washer uses a lot of water” states a fact, but using a lot of water is negative; this is a matter of identifying resource usage).

Aspect Extraction

Now we'll look at the other task of aspect-based sentiment analysis, extracting the aspects from the text. There are four main approaches for accomplishing this:

- Extraction based on frequent nouns and noun phrases

- Extraction by exploiting opinion and target relations

- Extraction using supervised learning

- Extraction using topic modeling

Below follows a brief description of each approach:

Finding frequent nouns and noun phrases. This method finds explicit aspect expressions by using sequential pattern mining to find frequent phrases. Each frequent noun phrase is given a PMI (pointwise mutual information) score, computed between the phrase and some meronymy discriminators associated with the entity class.

Use opinion and target relations. Use known sentiment words to extract aspects as the target for that sentiment. For example, in "the software is amazing", amazing is a known sentiment word and software is extracted as an aspect. Two approaches exist: the double propagation method and a rule-based approach.

Using supervised learning. Based on sequential learning (labeling). Supervised means that it needs a pre-labeled training set. The most widely used state-of-the-art methods are HMM (Hidden Markov Models) and CRF (Conditional Random Fields).

Using topic modeling. An unsupervised learning method that assumes each document consists of a mixture of topics and each topic is a probability distribution over words. The output of topic modeling is a set of word clusters. Based on Bayesian networks. The two main basic models are pLSA (Probabilistic Latent Semantic Analysis) and LDA (Latent Dirichlet Allocation).

Two unsupervised methods

As described by two of the papers

Search-based

Turney: Thumbs Up or Thumbs Down?.
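As a rough illustration of the search-based idea, Turney estimates the semantic orientation (SO) of a phrase as its PMI with "excellent" minus its PMI with "poor", using search engine hit counts. The sketch below assumes the four hit counts have already been collected; the function name and the example numbers are made up, and the 0.01 smoothing term is there to avoid division by zero.

```python
import math


def semantic_orientation(near_excellent, near_poor, hits_excellent, hits_poor):
    """SO(phrase) = PMI(phrase, "excellent") - PMI(phrase, "poor"), from hit counts.

    near_excellent / near_poor: hits for the phrase NEAR each reference word.
    hits_excellent / hits_poor: hits for the reference words on their own.
    """
    return math.log2(
        ((near_excellent + 0.01) * hits_poor)
        / ((near_poor + 0.01) * hits_excellent)
    )


# Hypothetical counts: the phrase co-occurs far more often with "excellent".
print(semantic_orientation(100, 10, 1000, 1000) > 0)
```

A review is then classified as recommended when the average SO of its extracted phrases is positive, and as not recommended otherwise.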

Lexicon-based

Kreutzer & Witte: Opinion Mining Using SentiWordNet.

Recommender systems

Recommender systems are used to match users with items. This is done to avoid information overload and to assist in sales by guiding, advising and persuading individuals when they are looking to buy a product or a service.

Recommender systems elicit the interests and preferences of an individual, and make recommendations. This has the potential to support and improve the quality of a customer's decisions.

Different recommender systems require different designs and paradigms, based on what data can be used, implicit and explicit user feedback and domain characteristics.

Paradigms of recommender systems

There are many different ways to design a recommendation system. What they have in common is that they feed input into a recommendation component and use it to output a recommendation list. What varies between the paradigms is what goes into the recommendation component. To get personalized recommendations, the input includes a user profile and contextual parameters.

Collaborative recommender systems, or "tell me what is popular among my peers", use community data.

Content-based recommender systems, or "show me more of what I've previously liked", use the features of products.

Knowledge-based recommender systems, or "tell me what fits my needs", use both the features of products and knowledge models to predict what the user needs.

Hybrid recommender systems use a combination of the above and/or compositions of different mechanisms.

Collaborative filtering

Pure collaborative approaches take a matrix of given user–item ratings as the only input and typically produce output of (a) a (numerical) prediction indicating to what degree the current user will like or dislike a certain item, and (b) a list of n recommended items. Such a top-N list should not contain items that the current user has already bought.

User-based nearest neighbor recommendation

Take the ratings database and the current (active) user as input, and identify other users that had similar preferences to those of the active user in the past. For all products not yet seen by the active user, predict the rating based on the ratings made by the peer users. This is done under the assumptions that: (a) if users had similar tastes in the past they will have similar tastes in the future, and (b) user preferences remain stable and consistent over time.

The best similarity metric for user-based nearest neighbor is Pearson's correlation coefficient. This returns a number between -1 and 1, describing the correlation (positive or negative).

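$$\mathrm{sim}(a,b) = \frac{\sum_{p \in P} (r_{a,p} - \bar{r}_a)(r_{b,p} - \bar{r}_b)}{\sqrt{\sum_{p \in P} (r_{a,p} - \bar{r}_a)^2}\,\sqrt{\sum_{p \in P} (r_{b,p} - \bar{r}_b)^2}}$$

where $a$ and $b$ are users, $r_{a,p}$ is the rating of user $a$ for item $p$, $\bar{r}_a$ is the average rating of user $a$, and $P$ is the set of items rated by both $a$ and $b$.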
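A minimal sketch of Pearson's correlation between two users follows. The ratings are made-up example data, and the user averages are taken over the co-rated items only, which is one common convention (some presentations average over all of a user's ratings instead).

```python
import math


def pearson(ratings_a, ratings_b):
    """Pearson correlation between two users over the items both have rated."""
    common = sorted(set(ratings_a) & set(ratings_b))
    if not common:
        return 0.0
    # Assumption: averages are computed over the co-rated items only.
    mean_a = sum(ratings_a[p] for p in common) / len(common)
    mean_b = sum(ratings_b[p] for p in common) / len(common)
    numerator = sum((ratings_a[p] - mean_a) * (ratings_b[p] - mean_b) for p in common)
    var_a = sum((ratings_a[p] - mean_a) ** 2 for p in common)
    var_b = sum((ratings_b[p] - mean_b) ** 2 for p in common)
    if var_a == 0 or var_b == 0:
        return 0.0  # undefined when a user rates everything the same
    return numerator / math.sqrt(var_a * var_b)


# Hypothetical ratings: item -> rating on a 1-5 scale.
alice = {"i1": 5, "i2": 3, "i3": 4, "i4": 4}
user1 = {"i1": 3, "i2": 1, "i3": 2, "i4": 3, "i5": 3}

print(round(pearson(alice, user1), 3))
```

The two users rate on different levels (user1 is consistently lower), but their ratings rise and fall together, so the correlation is strongly positive. This is the property that makes Pearson attractive compared to comparing raw ratings.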

User-based nearest neighbor has several challenges. The first is computational complexity: it doesn't scale. The second is how to deal with new items for which no ratings exist yet, called the cold-start problem. The third is that the rating matrix is typically very sparse (see Sparsity).

Item-based nearest neighbor recommendation

Large-scale systems need to handle millions of users and items, which makes it impossible to compute predictions in real time (user-based collaborative filtering won't do). The idea is to compute predictions using the similarity between items and not the similarity between users. Look at the columns of the rating matrix, rather than the rows.

The Cosine similarity measure finds the similarity between rating-vectors for two items, and ranges from 0 to 1. The adjusted cosine measure subtracts the user average from the ratings, and ranges from -1 to 1. The best similarity metric for item-based nearest neighbor is Adjusted cosine similarity.

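$$\mathrm{sim}(\vec{a},\vec{b}) = \frac{\vec{a} \cdot \vec{b}}{|\vec{a}|\,|\vec{b}|}$$

$$\mathrm{sim}(a,b) = \frac{\sum_{u \in U} (r_{u,a} - \bar{r}_u)(r_{u,b} - \bar{r}_u)}{\sqrt{\sum_{u \in U} (r_{u,a} - \bar{r}_u)^2}\,\sqrt{\sum_{u \in U} (r_{u,b} - \bar{r}_u)^2}}$$

where $\vec{a}$ and $\vec{b}$ are the rating vectors of items $a$ and $b$, $U$ is the set of users who have rated both items, $r_{u,a}$ is the rating of user $u$ for item $a$, and $\bar{r}_u$ is the average rating of user $u$.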

Data can be preprocessed for item-based filtering. The idea is to construct the item similarity matrix in advance (pairwise similarity of all items). At run time, a prediction for a product and user is made by determining the items that are most similar to the product and by building the weighted sum of the user's ratings for these items in the neighborhood.
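The following sketch shows item-based prediction with the adjusted cosine measure. The `RATINGS` data is made up, similarities are computed on demand rather than precomputed, and the neighborhood is simply "all items the user has rated with positive similarity"; these are simplifying assumptions.

```python
import math

# Hypothetical ratings: user -> {item: rating} on a 1-5 scale.
RATINGS = {
    "alice": {"i1": 5, "i2": 3, "i3": 4, "i4": 4},
    "u1": {"i1": 3, "i2": 1, "i3": 2, "i4": 3, "i5": 3},
    "u2": {"i1": 4, "i2": 3, "i3": 4, "i4": 3, "i5": 5},
    "u3": {"i1": 3, "i2": 3, "i3": 1, "i4": 5, "i5": 4},
    "u4": {"i1": 1, "i2": 5, "i3": 5, "i4": 2, "i5": 1},
}


def adjusted_cosine(ratings, item_a, item_b):
    """Cosine similarity of two items after subtracting each user's average rating."""
    num = den_a = den_b = 0.0
    for user_ratings in ratings.values():
        if item_a in user_ratings and item_b in user_ratings:
            mean = sum(user_ratings.values()) / len(user_ratings)
            da = user_ratings[item_a] - mean
            db = user_ratings[item_b] - mean
            num += da * db
            den_a += da * da
            den_b += db * db
    if den_a == 0 or den_b == 0:
        return 0.0
    return num / math.sqrt(den_a * den_b)


def predict(ratings, user, item):
    """Similarity-weighted average of the user's ratings on positively similar items."""
    num = den = 0.0
    for other, rating in ratings[user].items():
        sim = adjusted_cosine(ratings, item, other)
        if sim > 0:
            num += sim * rating
            den += sim
    return num / den if den else None


print(round(adjusted_cosine(RATINGS, "i5", "i1"), 2))
print(predict(RATINGS, "alice", "i5"))
```

In a production setting the pairwise similarities would be stored in a precomputed item similarity matrix, so that `predict` only does lookups at run time.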

Content-based filtering

In contrast to collaborative approaches, content-based techniques do not require a user community in order to work. Most approaches for this type of filtering aim to learn a model of the user's interests and preferences based on explicit or implicit feedback. Good recommendation accuracy can be achieved with this type of filtering with the help of machine learning techniques.

Content-based filtering has a lot in common with information retrieval, since one must extract aspects of the items to compare with the user's preferences.

Similarity-based retrieval

Recommend items that are similar to those the user liked in the past

You can use the nearest-neighbor approach, check whether the user liked similar documents in the past, or a relevance feedback approach called Rocchio’s method, where the user gives feedback on whether the retrieved items were relevant. Both these build on the vector space model.

Vector space model

Items are represented as vectors, with their features as attributes with different weight. For example, a book can have the following vector for genres:

book = [fantasy=0.3, adventure=0.2, biography=0, ...]

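The weights can be boolean or numeric. How is this score for the genres derived? It could be by looking at all the words in the book. Before such text classification is applied, certain filters should be applied to the text, like stop-word removal, stemming, etc. (for more on this subject see the Information Retrieval-compendium).

A standard measure for the weights described above is term frequency-inverse document frequency (TF-IDF).

$$\text{TF-IDF}(i,j) = \mathrm{TF}(i,j) \cdot \mathrm{IDF}(i), \qquad \mathrm{TF}(i,j) = \frac{\mathrm{freq}(i,j)}{\max_{z} \mathrm{freq}(z,j)}, \qquad \mathrm{IDF}(i) = \log\frac{N}{n(i)}$$

where $\mathrm{freq}(i,j)$ is the number of occurrences of keyword $i$ in document $j$, the maximum is taken over the keywords $z$ in document $j$, $N$ is the total number of documents, and $n(i)$ is the number of documents in which keyword $i$ appears.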
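A small sketch of TF-IDF weighting, assuming the documents have already been tokenized and filtered. Term frequency is normalized by the most frequent term in the document and the natural logarithm is used for IDF; both are common choices, but other variants exist. The example documents are made up.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document; documents are lists of tokens."""
    n_docs = len(documents)
    # Number of documents each term appears in.
    doc_freq = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        max_count = max(counts.values(), default=1)
        weights.append({
            term: (count / max_count) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical, already preprocessed documents.
docs = [
    ["dragon", "wizard", "wizard"],
    ["wizard", "school"],
    ["dragon", "cave"],
]
for vector in tf_idf(docs):
    print(vector)
```

Terms that occur in few documents ("school", "cave") get the highest weights, while a term that occurred in every document would get weight 0 and thus not help to distinguish items.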

When both items and user preferences are represented as vectors we can compare their similarity. The most common metric is the cosine similarity described under Item-based nearest neighbor recommendation.

Other text classification methods can also be used for retrieval, such as Naive Bayes, Widrow-Hoff, Support Vector Machines, k nearest neighbors, decision trees, and rule induction.

One challenge of content-based filtering is deriving implicit feedback from user behavior. Also, recommendations can contain too many similar items. In addition, all techniques that apply learning algorithms require training data, and some can overfit this training data.

Pure content-based systems are rarely found in commercial environments; they are more often combined with collaborative filtering in a hybrid approach. Content-based filtering can be used to cope with some of the limitations of CF, like the cold-start problem.

Evaluating Recommender Systems

What makes a good recommender system?

You can measure recommender systems by their efficiency (with respect to accuracy, user satisfaction, response time, serendipity*, online conversion, ramp-up efforts, etc.), by whether customers like or buy recommended items, by whether customers buy items they otherwise would not have, or by whether they are satisfied with a recommendation after purchase.

*Serendipity: identifying items from the Long Tail, meaning items that are not popular among the majority of users, but that the user might actually like.

Below follows a series of different metrics and, unfortunately, a lot of math.

Sparsity

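Sparsity describes how much of the ratings matrix is empty, i.e. how many user-item pairs have no rating. It is calculated by:

$$\text{sparsity} = 1 - \frac{|R|}{|I| \cdot |U|}$$

where $R$ is the set of ratings, $I$ is the set of items, and $U$ is the set of users.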

Error Rate

Mean Absolute Error (MAE) computes the deviation between predicted ratings and actual ratings.

Root Mean Square Error (RMSE) is similar to MAE, but places more emphasis on larger deviations.
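Both error measures are easy to compute from paired lists of predicted and actual ratings. The sketch below uses made-up numbers and assumes the two lists are aligned and non-empty.

```python
import math


def mae(predicted, actual):
    """Mean Absolute Error: average absolute deviation."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def rmse(predicted, actual):
    """Root Mean Square Error: squares deviations before averaging."""
    return math.sqrt(
        sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)
    )


# Hypothetical predictions: two are close, one is off by 3.
predicted = [4, 3, 5]
actual = [5, 3, 2]
print(mae(predicted, actual), rmse(predicted, actual))
```

Because the deviations are squared, the single large error dominates RMSE, so RMSE comes out higher than MAE on this data.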

Precision

A measure of exactness, determines the fraction of relevant items retrieved out of all items retrieved, i.e. the proportion of recommended items that are actually good.

Recall

A measure of completeness, determines the fraction of relevant items retrieved out of all relevant items, i.e. the proportion of all good items that are recommended.

F1

Combines precision and recall in a single metric: the harmonic mean of the two.
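A minimal sketch of the three measures, treating the recommendation list and the set of relevant ("good") items as sets. The item names are made up.

```python
def precision_recall_f1(recommended, relevant):
    """Return (precision, recall, F1) for a recommendation list."""
    recommended, relevant = set(recommended), set(relevant)
    hits = len(recommended & relevant)
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


# Hypothetical example: 4 items recommended, 3 items are actually relevant.
print(precision_recall_f1(["a", "b", "c", "d"], ["a", "c", "e"]))
```

Here two of the four recommendations are hits (precision 0.5) and two of the three relevant items were found (recall 2/3).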

Rank Score

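Extends the recall metric to take the position of correct items in a ranked list into account (the order of the recommendations matters). Defined as the ratio of the Rank Score of the correct items to the best theoretical Rank Score achievable for the user.

$$\text{rankscore} = \frac{\text{rankscore}_p}{\text{rankscore}_{\max}}, \qquad \text{rankscore}_p = \sum_{i \in h} 2^{-\frac{\mathrm{rank}(i) - 1}{\alpha}}, \qquad \text{rankscore}_{\max} = \sum_{i=1}^{|T|} 2^{-\frac{i - 1}{\alpha}}$$

where $h$ is the set of correctly recommended items (hits), $\mathrm{rank}(i)$ is the position of item $i$ in the list, $T$ is the set of all items of interest, and $\alpha$ is the ranking half-life.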

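Lift index

Assumes that the ranked list is divided into 10 equal deciles $S_1, \dots, S_{10}$, and weights hits linearly by the decile they fall into:

$$\text{liftindex} = \begin{cases} \dfrac{1 \cdot S_1 + 0.9 \cdot S_2 + \dots + 0.1 \cdot S_{10}}{\sum_{i=1}^{10} S_i} & \text{if } |h| > 0 \\ 0 & \text{otherwise} \end{cases}$$

where $S_i$ is the number of hits in decile $i$ and $h$ is the set of hits, so that $\sum_{i=1}^{10} S_i = |h|$.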

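Discounted Cumulative Gain

Discounted Cumulative Gain (DCG) accumulates the relevance of the recommended items, discounting each by a logarithmic reduction factor based on its position in the list.

$$\mathrm{DCG}_{pos} = rel_1 + \sum_{i=2}^{pos} \frac{rel_i}{\log_2 i}$$

where $pos$ is the position up to which relevance is accumulated and $rel_i$ is the relevance of the recommendation at position $i$.

Idealized Discounted Cumulative Gain (IDCG) is the DCG obtained when the items are ordered by decreasing relevance.

Normalized Discounted Cumulative Gain (nDCG) divides DCG by IDCG, which normalizes the value to the interval [0,1].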
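As a sketch, DCG and nDCG can be computed from the list of relevance values in recommendation order. The version below leaves the first position undiscounted and divides position i (from 2 onward) by log2(i); the ideal ordering is obtained by sorting the same relevance values, which is a simplifying assumption. The example values are made up.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain of relevance values given in rank order."""
    return sum(
        rel if rank == 1 else rel / math.log2(rank)
        for rank, rel in enumerate(relevances, start=1)
    )


def ndcg(relevances):
    """DCG divided by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal else 0.0


# Hypothetical graded relevance of five recommendations, in list order.
print(dcg([3, 2, 3, 0, 1]), ndcg([3, 2, 3, 0, 1]))
```

A list that is already sorted by decreasing relevance gets an nDCG of exactly 1; any other ordering of the same items scores lower.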

Average Precision

This is a ranked precision metric that places emphasis on highly ranked correct predictions (hits). It is the average of precision values determined after each successful prediction.

Given this result:

| Rank | Hit? |
|------|------|
| 1    | X    |
| 2    |      |
| 3    |      |
| 4    | X    |
| 5    | X    |
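For the result above, the hits are at ranks 1, 4 and 5, so the precision values after each hit are 1/1, 2/4 and 3/5, and the average precision is (1 + 0.5 + 0.6) / 3 = 0.7. The same calculation as a short sketch, with the hit list encoded as booleans in rank order:

```python
def average_precision(hits):
    """Average of the precision values measured at each hit in a ranked list."""
    precisions = []
    hit_count = 0
    for rank, is_hit in enumerate(hits, start=1):
        if is_hit:
            hit_count += 1
            precisions.append(hit_count / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


# Hits at ranks 1, 4 and 5, as in the table.
print(round(average_precision([True, False, False, True, True]), 2))
```

Moving the hits towards the top of the list raises the score: hits at ranks 1, 2 and 3 would give an average precision of 1.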